To automate and secure machine learning operations, one needs to know about programming with SageMaker SDK. While algorithms are data scientists’ wheelhouse, infrastructure engineers like myself need to understand the skeleton of the code in order to orchestrate the activities, optimize performances, and secure the communications.

It was overwhelming to begin with. Programming in machine learning heavily depends on specific frameworks, SDKs, and is highly experimental. The lines of code that performs training, or inference, can be very resource demanding, which further complicates the operation. However, most of the tutorials target data scientist aspirant and lack the perspective of operations.

In this post I’ll go over what I’ve learned about training and inference workload. I will not focus on specific algorithms. Instead, I’ll use the most easy-to-understand algorithm, solving a typical problem. I’ll also use a simple framework (SK Learn) but try to reveal what is common amongst other supported frameworks.

Frameworks and Data

Amongst many programming frameworks, I need one that is generic, beginner friendly and compatible with SageMaker AI. The candidates are:

- Scikit-learn: good for classical machine learning activities;

- TensorFlow and PyTorch: industry standards supporting a wide range of tasks; from simple models to advanced deep learning applications;

- Hugging Face, PyTorch and TensorFlow can handle NLPs;

- XGBoost excels at handling tabular data

The use case statements above might be over-simplifications but at least Scikit-learn is a solid choice for beginners. It’s also good to keep Pytorch and TensorFlow in mind. As to the machine learning problem, I want to go easy with a simple classification problem with Logistic Regression. Despite the word “regression” in the name, logistic regression is an algorithm to solve classification problems (whereas linear regression solves a regression problem).

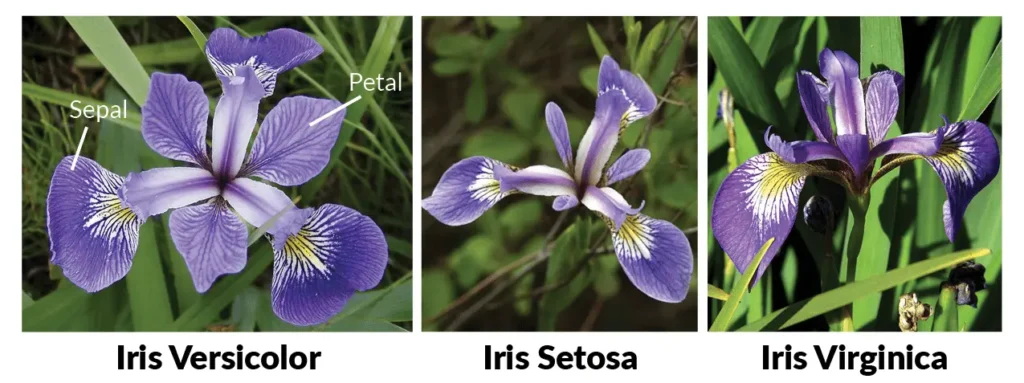

I use the simplistic iris flower data set to solve the classification problem: identifying the species (setosa, versicolor, or virginica) based on the length and width of sepal and petal. The dataset is very clean and does not reflect real-world messiness, making it a good for testing machine learn concepts, although not for training in production. The input is four numbers. The prediction output is a number (0,1, or 2) representing the species identified.

Training and Inference

Machine Learning concerns with indeterministic results. We first train the models with input data along with known results. Once trained, we can store the model as artifact with version control. Then we serve the model so we can feed it with unseen data and use the output as predictions. This machine learning flow has a lot in common with SDLC. Training resembles building of an application, the output artifact is the trained model, which we store as flat file or tarballs. In a model lifecycle, we track versions of trained models, quality control the models, decomission them and so forth. To perform inference, we serve the model in different ways, such that consuming application can use it for predictions.

To get started, let’s look at the following simple snippet, where we load data, train a simple model and use it for inference.

import numpy as np

import pandas as pd

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import accuracy_score

# Step 1: Load the Iris dataset

data = load_iris()

X = data.data # Features (sepal length, sepal width, etc.)

y = data.target # Target (species labels)

# Step 2: Split the dataset into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# Step 3: Create and train a logistic regression model

model = LogisticRegression(max_iter=200)

model.fit(X_train, y_train)

# Step 4: Predict using the test set

y_pred = model.predict(X_test)

# Step 5: Evaluate the model

accuracy = accuracy_score(y_test, y_pred)

print(f"Model accuracy: {accuracy * 100:.2f}%")

Note that the snippet does not use any SageMaker SDK libraries yet. The example is simple enough to run anywhere. In real life however, the invocations of .fit() method and sometimes .predict() method can be resource-demanding. In such experiments, even a simple change to the hyper-parameter may dramatically increase the resource requirement. This experimental nature calls for a mechanism to allow execution of certain lines of code, in a different runtime environment. The SageMaker SDK provides two mechanisms: a @remote decorator and a RemoteExecutor class. Take the decorator as an example, it employs Python’s decorator to implement a wrapper of the function in the code so that the function can execute remotely on a different machine. The code looks like this:

import numpy as np

import pandas as pd

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import accuracy_score

# Step 1: Load the Iris dataset

data = load_iris()

X = data.data # Features (sepal length, sepal width, etc.)

y = data.target # Target (species labels)

# Step 2: Split the dataset into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# Step 3: Create and train a logistic regression model: put the action in a function and mark it to execute remotely

from sagemaker.remote_function import remote

@remote(instance_type="ml.m5.large")

def train(X_train,y_train):

model = LogisticRegression(max_iter=200)

model.fit(X_train, y_train)

return model

model=train(X_train, y_train)

# Step 4: Predict using the test set: put the action in a function and mark it to execute remotely

@remote(instance_type="ml.m5.large")

def predict(model):

return model.predict(X_test)

y_pred = predict(model)

# Step 6: Evaluate the model

accuracy = accuracy_score(y_test, y_pred)

print(f"Model accuracy: {accuracy * 100:.2f}%")

Note that this snippet requires a SageMaker SDK library (sagemaker.remote_function) and must run on a Jupyter Lab notebook. That comes with the flexibility to run any function on any type of instance allowed in the SageMaker domain. When a function runs remotely from Jupyter workspace notebook, SageMaker has to spend time provisioning and bootstrapping an EC2 instance, which usually takes a noticeable delay and the stdout gives a summary of billable seconds. SageMaker also capture these remote executions as training job uniformly.

SageMaker SDK Abstractions

We can summarize the activity pattern from the two example scripts as below:

This pattern however, does not fully integrate with other SageMaker AI capabilities. In a full-blown integration, we use the abstraction classes in SageMaker SDK, so that the platform has insights into our activities in the script, i.e. when we’re training models, building pipelines, running inference, etc. This allows SageMaker AI platform to manage these activities. The verb manage represents many specific operations to keep track of outside of the Python scripting runtime. For example, we can keep the training runs, even after the Jupyter workspace runtime is stopped or deleted.

We have to know the abstraction classes for different frameworks. They all follow the same pattern regardless of the framework name. Here is a diagram of the relations between the base classes in SageMaker SDK:

In this programming model, the users offload activities like training and loading a model to a separate Python script called entry point script. Then the users reference the script in the constructor of the estimator. The platform requires the script to follow a particular pattern. For example, to load a model, the function named model_fn() must exist. The training code goes under main() function. A simple entry point script looks like this:

# entry_point.py script for training and loading models on SageMaker AI

import argparse

import joblib

import pandas as pd

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

import os

def main(args):

# Load data

df = pd.read_csv(args.train)

X = df.drop(columns='species')

y = df['species']

# Train-test split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# Train the logistic regression model

model = LogisticRegression(max_iter=200)

model.fit(X_train, y_train)

# Evaluate the model

y_pred = model.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)

print(f'Model accuracy: {accuracy * 100:.2f}%')

# Save the model to a file

joblib.dump(model, '/opt/ml/model/model.joblib')

if __name__ == '__main__':

parser = argparse.ArgumentParser()

parser.add_argument('--train', type=str, default='/opt/ml/input/data/train/iris_train.csv')

args = parser.parse_args()

main(args)

# function to load a model

# https://sagemaker.readthedocs.io/en/stable/frameworks/sklearn/using_sklearn.html#load-a-model

def model_fn(model_dir):

"""Load the trained model from the model_dir"""

model_path = os.path.join(model_dir, "model.joblib")

return joblib.load(model_path)

This script takes an argument for the location of the training data. The main function performs the model training. Let’s name this script entry_point.py and in the next couple sections we can discuss two patterns to perform inference in a scalable fashion: Batch Transform and Inference Endpoint.

Batch Transform

Training is an expensive activity that we cannot afford to repeat upon receiving every inference requests. That’d be too slow. To serve the model for inference request in a scalable way, we hold the inference requests in batches, and sometimes in the form of a multi-line input file from S3 bucket. Below is an example:

# Import necessary libraries for SageMaker

import sagemaker

from sagemaker import get_execution_role

from sklearn.datasets import load_iris

import pandas as pd

import os

import joblib

# Get the execution role for the SageMaker notebook

role = get_execution_role()

########################################################

import boto3

from sagemaker.inputs import TrainingInput

# Load the Iris dataset

iris = load_iris()

X = iris.data

y = iris.target

# Create a DataFrame to easily export

df = pd.DataFrame(X, columns=iris.feature_names)

df['species'] = y

# Save dataset to a CSV file and upload to S3 bucket

train_file = 'iris_train.csv'

df.to_csv(train_file, index=False)

s3_bucket = 'sagemaker-ca-central-1-train' # Make sure the bucket exists

s3_data_path = f's3://{s3_bucket}/iris/'

s3 = boto3.client('s3')

s3.upload_file(train_file, s3_bucket, 'iris/iris_train.csv')

# Define the location of training data in S3

s3_input_train = TrainingInput(s3_data=s3_data_path, content_type='csv')

########################################################

# Define the SKlearn Estimator and start training the model

from sagemaker.sklearn import SKLearn

sklearn_estimator = SKLearn(

entry_point='train.py', # Your script

role=role,

instance_type='ml.m5.large', # Choose an appropriate instance

instance_count=1,

framework_version='0.23-1', # Use a compatible version of sklearn

base_job_name='sklearn-iris-job',

hyperparameters={},

source_dir='.', # If using any other source code files, include them here

)

# Start training the model

sklearn_estimator.fit({'train': s3_input_train})

########################################################

# Preparing inference input file and output directory

input_file='batch_input.csv'

pd.DataFrame(X).to_csv(input_file, header=False, index=False)

# Upload the CSV file to S3

s3_bucket = 'sagemaker-ca-central-1-train' # Make sure you replace with your bucket

s3_data_path = f's3://{s3_bucket}/iris_input/'

s3 = boto3.client('s3')

s3.upload_file(input_file, s3_bucket, 'iris_input/batch_input.csv')

s3_output_path = f's3://{s3_bucket}/iris_ouput/'

########################################################

transformer = sklearn_estimator.transformer(

instance_count=1,

instance_type='ml.m5.large',

output_path=s3_output_path,

strategy='MultiRecord',

assemble_with='Line',

accept='text/csv',

)

transformer.transform(

data=f'{s3_data_path}{input_file}',

content_type='text/csv',

split_type='Line',

input_filter="$[0,1,2,3]"

)

transformer.wait()

########################################################

We specify the instance size while creating the estimator with constructor. The later transform step takes long and requires provisioning of a computing resource. After the transformer run, the result is in the S3 bucket in the output directory.

Inference Endpoint

If inference requests come in at arbitrary times and expect timely responses, it makes sense to introduce client-server architecture. We use web service framework to host trained models behind a passively-open inference endpoint, allowing client applications to make REST API calls with inference input in the payload, and get inference output in the HTTP response. Essentially, we serve the model behind web servers.

In SageMaker AI, we can set up inference endpoint in three ways. The most common is real-time inference endpoint. We can configure a fleet of web servers in an autoscaling group to serve the inference requests. Below is an example:

# Import necessary libraries for SageMaker

import sagemaker

from sagemaker import get_execution_role

from sklearn.datasets import load_iris

import pandas as pd

import os

import joblib

# Get the execution role for the SageMaker notebook

role = get_execution_role()

########################################################

import boto3

from sagemaker.inputs import TrainingInput

# Load the Iris dataset

iris = load_iris()

X = iris.data

y = iris.target

# Create a DataFrame to easily export

df = pd.DataFrame(X, columns=iris.feature_names)

df['species'] = y

# Save dataset to a CSV file and upload to S3 bucket

train_file = 'iris_train.csv'

df.to_csv(train_file, index=False)

s3_bucket = 'sagemaker-ca-central-1-train' # Make sure you replace with your bucket

s3_data_path = f's3://{s3_bucket}/iris/'

s3 = boto3.client('s3')

s3.upload_file(train_file, s3_bucket, 'iris/iris_train.csv')

# Define the location of your training data in S3

s3_input_train = TrainingInput(s3_data=s3_data_path, content_type='csv')

########################################################

# Define the SKlearn Estimator and start training the model

from sagemaker.sklearn import SKLearn

sklearn_estimator = SKLearn(

entry_point='train.py', # Your script

role=role,

instance_type='ml.m5.large', # Choose an appropriate instance

instance_count=1,

framework_version='0.23-1', # Use a compatible version of sklearn

base_job_name='sklearn-iris-job',

hyperparameters={},

source_dir='.', # If using any other source code files, include them here

)

# Start training the model

sklearn_estimator.fit({'train': s3_input_train})

########################################################

# Create a model based on training result

model = sklearn_estimator.create_model()

predictor = model.deploy(

role=role,

initial_instance_count=1,

instance_type='ml.m5.large',

endpoint_name='TESTENDPOINT1',

wait=True,

)

########################################################

# Make predictions with the deployed model

test_data = [[5.1, 3.5, 1.4, 0.2]] # Example test data (sepal length, width, petal length, petal width)

prediction = predictor.predict(test_data)

print('Prediction: ', prediction)

# Make predictions with the deployed model again

test_data_2 = [[6.7, 3.1, 5.6, 2.4]] # Example test data (sepal length, width, petal length, petal width)

prediction_2 = predictor.predict(test_data_2)

print('Prediction_2: ', prediction_2)

# Clean up by deleting the endpoint when done

predictor.delete_endpoint()

The real-time inference endpoint is backed by specialized hardware and remains up all the time. That is expensive, especially if inference requests are only sporadic and can tolerate latency. To lower the cost, SageMaker AI platform supports two variants of inference: serverless and asynchronous inferences. The former stems from the (psudo-)concept of serverless computing, with the ability to scale to zero when idle. The latter uses a queue to hold up requests until a meaningful cumulation of jobs. Then it notifies the requestor of the processing results.

To use asynchronous inference, you specify the serverless_inference_config parameter when creating a model. To use asynchronous inference, you need to use the AsyncPredictor class. Whether every model (self-trained, JumpStart, etc) can work with all three patterns of inference, has to be reviewed case by base.

Summary

Most of the machine learning training materials were created by data scientist focusing on the models and algorithms. For infrastructure engineering like myself to learn the topic, I need to understand different ways that one can perform training and inference without getting swapped into the algorithmic details. In this post I took some simplistic examples to look at what training and inference looks like, as local script and as integrated with SageMaker AI platform using its SDKs, to familiarize myself with MLOps operations.