Update: also read my other article here on the different generations of ingress technologies.

In my Korthweb project, I was looking for the best ingress mechanism for HTTP and TCP workloads, both of which need to be secured. I started with Kubernetes Ingress but eventually decided to go with Istio Gateway. This post explains that decision: I will draw the distinction between Ingress and Gateway and explain why a Kubernetes platform needs the latter going forward.

The word ingress can refer either to the Ingress resource (in conjunction with an ingress controller) in the context of a Kubernetes cluster, or more generally to the technology (e.g. provided by a service mesh) that directs north-south traffic, originating outside the cluster, to the workload running within it. I will use both meanings of the word throughout the article.

Background

In my previous post, I discussed the Kubernetes Service object and native Ingress. In short, a Service object addresses the problem of exposing a workload (Pod or Deployment), operating at layer 3-4. A ClusterIP type of Service exposes the workload within the cluster only, and is therefore only used for internal services that are consumed by workloads in the same cluster. A NodePort type of Service exposes the workload outside the cluster, using the node's IP address and a port from the range available on the node. This is a great step forward but still quite inconvenient, because it is tied to the node's IP and port.

A LoadBalancer type of Service brings a separate IP address and port for running a service. To back this type of Service, the platform has to provide a load balancer with its own IP address and port range to manage. The implementation is platform specific, e.g. MetalLB for Minikube, Azure Load Balancer for AKS, NLB for AWS EKS. With this type of Service, node ports still exist but are not exposed to the outside world. Instead, they are only exposed to the backend of the load balancer, whose front-end port is exposed to the outside world. In both NodePort and LoadBalancer types of Service, the kube-proxy process on each node directs traffic arriving at the node port to the target Pods.
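As a minimal sketch of the Service types described above, the manifest below exposes a hypothetical workload through a LoadBalancer Service. The name `dicom-server`, the `app` label and port 11112 are assumptions for illustration only, not taken from the Korthweb project.

```yaml
# Illustrative only: names, labels and port numbers are hypothetical.
apiVersion: v1
kind: Service
metadata:
  name: dicom-server
spec:
  # ClusterIP   -> reachable inside the cluster only
  # NodePort    -> additionally reachable on <node IP>:<node port>
  # LoadBalancer-> additionally fronted by a platform-provided load balancer
  type: LoadBalancer
  selector:
    app: dicom-server          # Pods carrying this label receive the traffic
  ports:
    - name: dicom
      protocol: TCP
      port: 11112              # port exposed by the Service / load balancer front end
      targetPort: 11112        # container port on the target Pods
```

Changing `type` to `ClusterIP` or `NodePort` leaves the rest of the manifest unchanged, which is a convenient way to compare the three behaviours on a test cluster.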

Kubernetes Ingress, on the other hand, targets issues above layer 4, for example path-based routing, and is therefore mostly used for HTTP and HTTPS traffic. It is implemented by an Ingress Controller, and there is now a diverse ecosystem of ingress controllers. Many implementations (e.g. Azure Application Gateway Ingress Controller, AGIC) come with a load balancer that they manage themselves. This eliminates the need for a separate LoadBalancer type of Service just for L4 capability. As a result, Ingress (with both L4 and L7 capabilities) is usually deployed along with a ClusterIP type of Service. Here is an example. The declaration of the Ingress in this case usually contains numerous annotation lines in order to communicate implementation-specific settings to the controller.
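To illustrate the pairing of an Ingress with a ClusterIP Service, here is a minimal sketch that assumes the community ingress-nginx controller is installed and registered under the ingress class `nginx`. The host name, Service name and ports are hypothetical, and the annotations shown are just two of the many that ingress-nginx understands.

```yaml
# Illustrative only: host, names and ports are hypothetical.
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  type: ClusterIP              # internal only; the Ingress provides external access
  selector:
    app: web
  ports:
    - port: 8080
      targetPort: 8080
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: web-ingress
  annotations:
    # Controller-specific behaviour is communicated through annotations.
    nginx.ingress.kubernetes.io/rewrite-target: /
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
spec:
  ingressClassName: nginx
  rules:
    - host: web.example.com
      http:
        paths:
          - path: /app         # path-based (L7) routing
            pathType: Prefix
            backend:
              service:
                name: web
                port:
                  number: 8080
```

Note that the `rules` section only has an `http` block. There is no field for an arbitrary TCP port, which is the limitation discussed in the next section.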

Requirement

Using Kubernetes Ingress along with a Service of ClusterIP type seems to address the majority of use cases. However, it has its blind spots. My Korthweb project deals with DICOM traffic (a protocol on top of TCP) over TLS as well as HTTP traffic. The DICOM part breaks down into two requirements:

- The ability to proxy TCP traffic over an arbitrary TCP port

- The ability to terminate TLS/SSL encryption for TCP traffic

The Ingress documentation of Kubernetes clearly states that: an Ingress does not expose arbitrary ports or protocols. Exposing services other than HTTP and HTTPS to the internet typically uses a service of type Service.Type=NodePort or Service.Type=LoadBalancer.

The stance of the community-driven Nginx ingress controller can be found in its documentation, which suggests using a Service object for arbitrary TCP ports. There are some unofficial claims of workarounds, but I'm not confident in them.

I also went through a number of other Ingress providers, but to my disappointment, the only product that seems to support both requirements is Traefik:

| Product | Requirement #1 | Requirement #2 |
|---|---|---|
| F5-driven Nginx Ingress Controller | Supported | TLS termination for TCP traffic has been requested, but the request has not been closed as of yet. |
| HAProxy Ingress controller | Supported | There is no mention of this in the documentation. |
| Kong's Kubernetes Controller (with TCPIngress CRD) | Supported | Documentation claims support through SNI-based routing. However, the example demonstrates it using a self-signed certificate only. BYO cert is not supported according to this GitHub issue. |
| Traefik Labs' IngressRoute (with IngressRouteTCP CRD) | Supported | Documentation seems to suggest that it is supported by IngressRoute. |

My general impression is that Requirement #2 isn't in high demand, so providers either don't support it or are delaying the implementation. Even when they do support it, the CRD used for TCP ingress differs from the one used for HTTP. For example, with Traefik Labs, the CRD for TCP is IngressRouteTCP, and for HTTP it is IngressRoute.
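For reference, this is roughly what both requirements look like with Traefik's TCP-specific CRD. It is a sketch under several assumptions: the `apiVersion` shown is the one used by recent Traefik releases (older v2 releases use `traefik.containo.us/v1alpha1`), an entry point named `dicom` is assumed to be defined in Traefik's static configuration, and the host name, Service and Secret names are hypothetical.

```yaml
# Illustrative only: entry point, host, Service and Secret names are hypothetical.
apiVersion: traefik.io/v1alpha1
kind: IngressRouteTCP            # the HTTP counterpart is kind: IngressRoute
metadata:
  name: dicom-tls
spec:
  entryPoints:
    - dicom                      # TCP entry point listening on an arbitrary port (Requirement #1)
  routes:
    - match: HostSNI(`dicom.example.com`)
      services:
        - name: dicom-server
          port: 11112
  tls:
    secretName: dicom-tls-cert   # TLS is terminated at Traefik with this certificate (Requirement #2)
```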

Introduction to Gateway

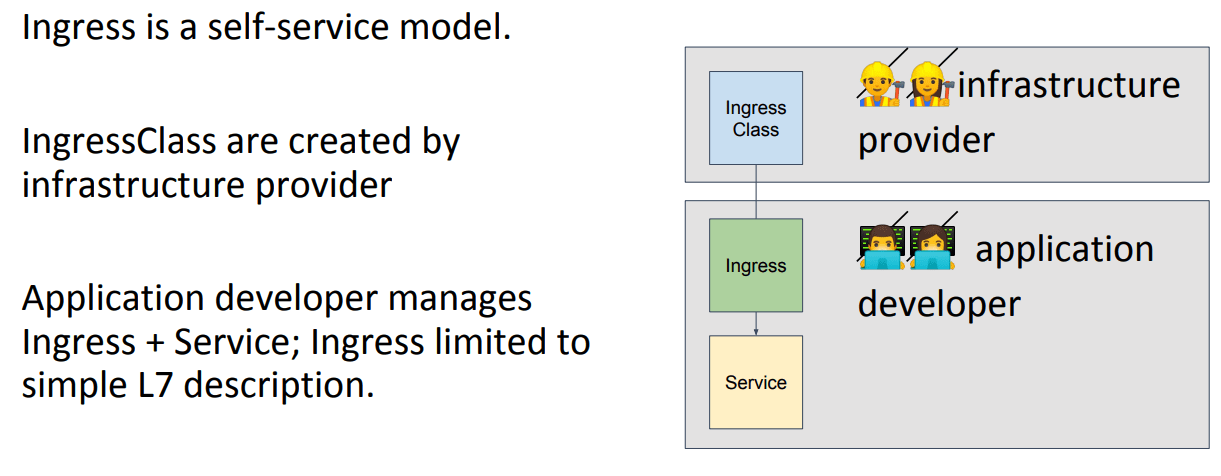

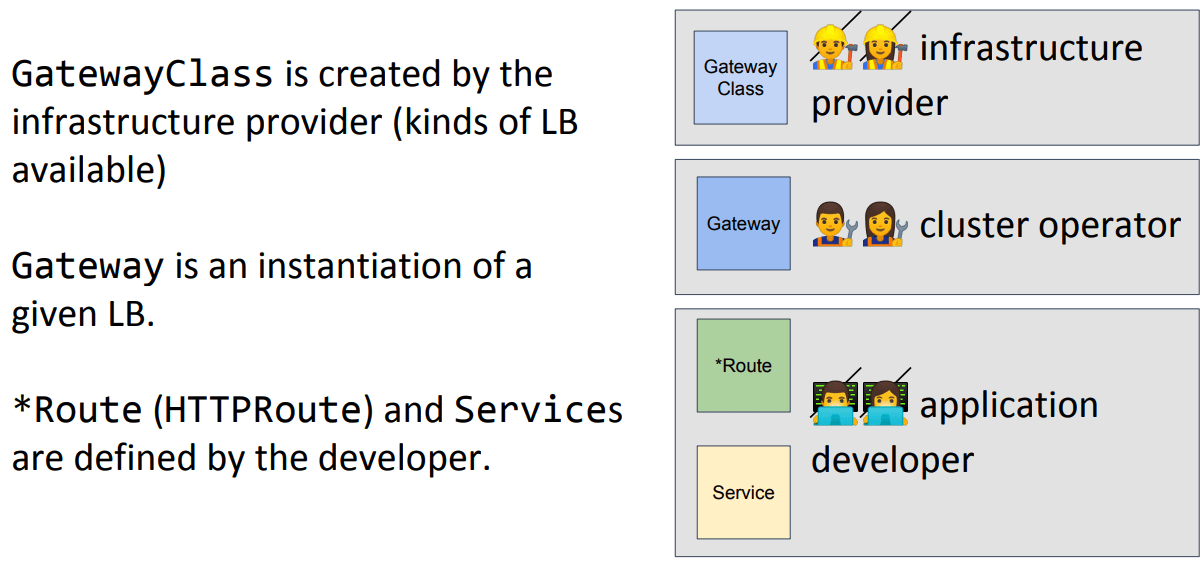

In addition to functional shortages, according to this blog post, there are signs of fragmentation into different but strikingly similar CRDs and overloaded annotations. In Kubecon 2019, a group of contributors discussed the evolution of Ingress into Gateway. Below are the two key slides stolen from their presentation:

This sums up how the concept of Gateway differs from Ingress. A Gateway is an instantiation of a given load balancer. It works along with Route to achieve the functions provided by an Ingress. With Gateway as a resource type of its own, L4-L6 concerns can be managed by a separate team, while routing at L7 is offloaded to the “Route” resource type.

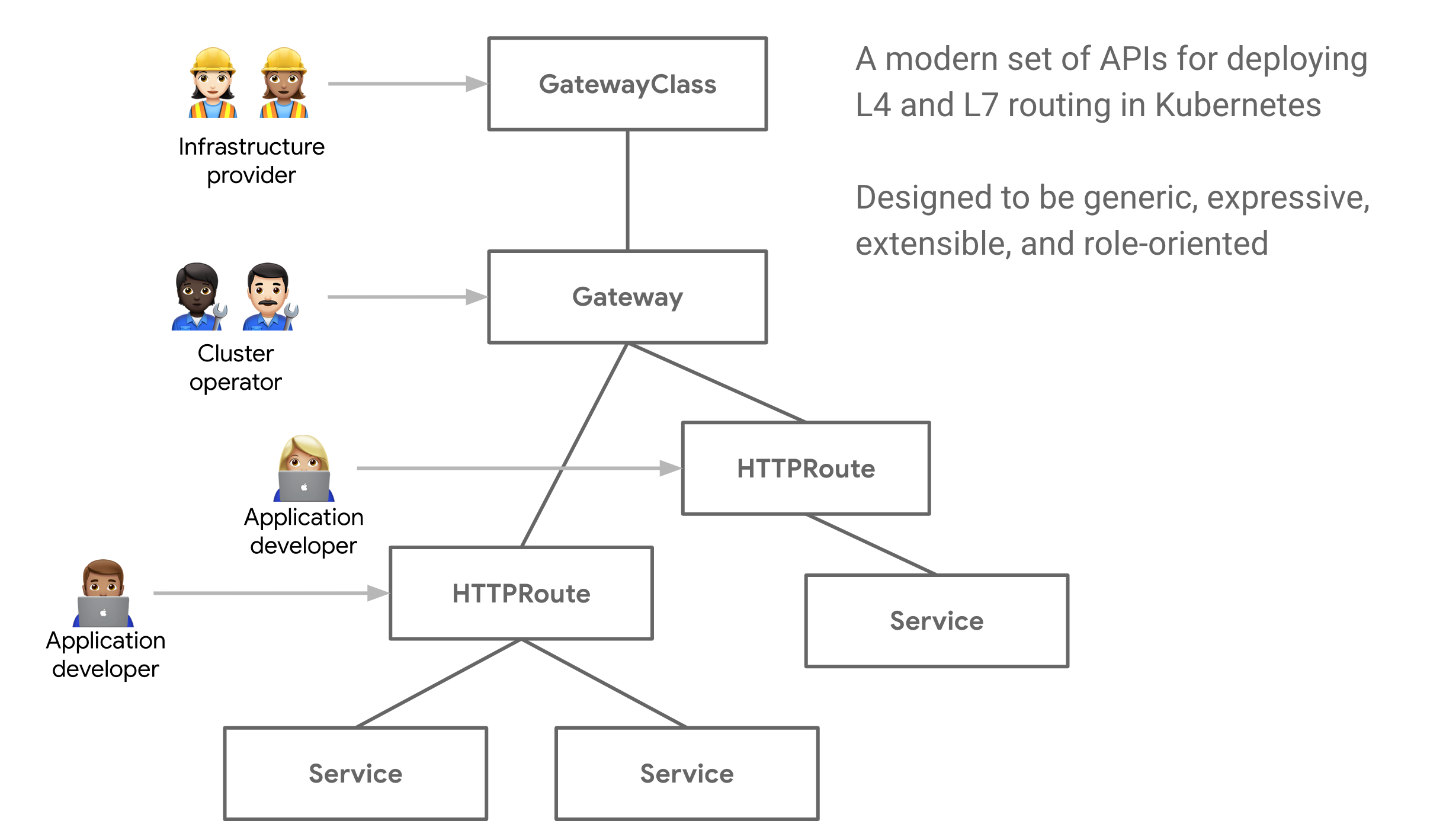

This conceptual evolution gave rise to the Gateway API open source project managed by the SIG-NETWORK community. Gateway API is a collection of resources that model service networking in Kubernetes, including GatewayClass, Gateway, HTTPRoute, TCPRoute, Service etc. The aim of this initiative is to evolve Kubernetes service networking through expressive, extensible and role-oriented interfaces that are implemented by many vendors and have broad industry support.

The diagram above, stolen from the Gateway API website, illustrates the management model for each resource.
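To make the resource model more concrete, here is a minimal sketch of a Gateway with one HTTP and one TCP listener, each with its own Route. The `apiVersion` values depend on the Gateway API release installed in the cluster: the ones below follow more recent releases, where Gateway and HTTPRoute are served as `v1` and TCPRoute is still an alpha-level resource. The GatewayClass name `example-class` and all other names and ports are hypothetical.

```yaml
# Illustrative only: class, names and ports are hypothetical.
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway                      # typically owned by the cluster operator
metadata:
  name: example-gateway
spec:
  gatewayClassName: example-class  # provided by the infrastructure/vendor
  listeners:
    - name: http
      protocol: HTTP
      port: 80
    - name: dicom
      protocol: TCP
      port: 11112
---
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute                    # typically owned by the application developer
metadata:
  name: web-route
spec:
  parentRefs:
    - name: example-gateway
      sectionName: http            # attach to the HTTP listener
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /app
      backendRefs:
        - name: web
          port: 8080
---
apiVersion: gateway.networking.k8s.io/v1alpha2
kind: TCPRoute
metadata:
  name: dicom-route
spec:
  parentRefs:
    - name: example-gateway
      sectionName: dicom           # attach to the TCP listener
  rules:
    - backendRefs:
        - name: dicom-server
          port: 11112
```

The split mirrors the role-oriented model: the listener definition lives in the Gateway, while the routing rules live in separate Route objects that attach to it through `parentRefs`.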

Kubernetes Gateway Implementations

The Gateway API is still a fairly young initiative, and all the reference implementations are either work in progress or in early stages. As the last section showed, in the journey from Ingress to Gateway the standardization initiative trails the implementation efforts. For many vendors, the natural strategy is to continue with their existing proprietary ingress controller implementation and retrofit it to the emerging Gateway API along the way.

Because of that, many market players offer multiple flavours of implementation: typically one that evolved from their original product offering, with higher adoption and maturity, and one that conforms to the Gateway API specification.

For example, Traefik Labs has its Gateway API implementation in an experimental stage. Its own implementation consists of (standard) Kubernetes Ingress and Kubernetes IngressRoute (based on custom resources, with support for advanced features such as TCP routes). Another example is HAProxy Ingress, a community-driven ingress controller for HAProxy. Starting from version 0.13, it partially supports the Gateway API's v1alpha1 specification. Here is the conformance statement. Contour, a CNCF ingress controller project, supports the Gateway API at alpha level. Kong's Kubernetes Ingress Controller follows the same path.

Gateway functionality is also provided as part of service mesh products, and some service mesh providers offer reference implementations. Istio has its own Gateway implementation but is also adapting to the Kubernetes Gateway API. HashiCorp Consul also claims to be building support for the Kubernetes Gateway API.

Until the Gateway API project matures, I do not recommend using Gateway implementations that conform to it, unless you intend to be their guinea pig. For my project, I chose Istio Gateway.

Istio Gateway implementation

As discussed, while the Gateway standard is still in its infancy, I chose a non-standard implementation of Gateway, even though it brings its own CRDs. Out of the many Gateway implementations, I chose Istio mainly for two reasons.

First, I need a Gateway as part of a service mesh, because the service mesh provides many other features that the platform needs. One of the goals of a service mesh is to provide commonly used features (traffic management, observability, security, extensibility) in a commodity layer on top of Kubernetes. Gateway is not the only thing I need out of this commodity layer. With one install of Istio, many common platform-level problems are also addressed (e.g. mTLS, traceability, etc). I compared three major service mesh technologies as follows:

| Linkerd | Istio | Consul |
|---|---|---|
| Lightweight; uses linkerd2-proxy; the original service mesh; no Gateway implementation (deal breaker in my use case) | Feature rich; uses Envoy proxy; complex but getting better | Initially a service discovery and distributed key-value store; lacks observability features but getting better; uses Envoy proxy |

It is worth noting that there are some initiatives to standardize service mesh (e.g. SMI), with reference implementations such as Open Service Mesh. For now, the standard is too weak to weigh as much as maturity and stability. Istio has strong community support. The risk to acknowledge with Istio is that it does not follow an open-governance model (unlike many CNCF projects), which could potentially cause vendor lock-in.

Second, Istio separates the Gateway and Virtual Service CRDs. The former defines the entry point and the latter defines routing rules. This separation gives the flexibility to reuse the same Virtual Service with different gateways.

Istio uses Envoy proxies to manage traffic between Pods. Unlike the kube-proxy pattern, the Envoy proxies are sidecar containers centrally managed by the Istio control plane, which also enables other features such as traceability, as illustrated in the diagram below (stolen from this post).

In addition to Virtual Service, Istio also has the concept of Destination Rule. A Virtual Service defines how to route traffic to different destinations. A Destination Rule defines the subsets available at a routing destination and the policies applied to traffic once it has been routed there. The concepts are documented on this page. For HTTP traffic, the relevant entities can be represented in the diagram below:
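In manifest form, the relationship between the two resources can be sketched as follows. This is an illustration, not the Korthweb configuration: the Service `web`, the `version` labels, the gateway name `web-gateway` and the 90/10 split are all hypothetical, and the `apiVersion` may differ depending on the Istio release.

```yaml
# Illustrative only: hosts, names, labels and weights are hypothetical.
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: web
spec:
  host: web.default.svc.cluster.local
  subsets:                         # named groups of Pods behind the same Service
    - name: v1
      labels:
        version: v1
    - name: v2
      labels:
        version: v2
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: web
spec:
  hosts:
    - web.example.com
  gateways:
    - web-gateway                  # the Gateway resource this routing applies to
  http:
    - route:                       # routing decision, referring to the subsets above
        - destination:
            host: web.default.svc.cluster.local
            subset: v1
          weight: 90
        - destination:
            host: web.default.svc.cluster.local
            subset: v2
          weight: 10
```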

Here is an example of using Gateway and Virtual Service resources (from the Korthweb sample project). Also note that in the Istio literature, there is neither a CRD called “Ingress” nor a resource type named “Ingress Gateway”, although these terms are loosely used to refer to “Gateway resources configured to manage ingress traffic”.
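For readers who cannot follow the link, the sketch below shows the general shape of such a pair for the TCP-over-TLS case discussed earlier. It is not copied from Korthweb: the host name, port 11112, the Secret `dicom-tls-cert` and the Service name are hypothetical, and the `selector` assumes the default label on Istio's ingress gateway Pods.

```yaml
# Illustrative only: host, port, Secret and Service names are hypothetical.
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: dicom-gateway
spec:
  selector:
    istio: ingressgateway          # assumed label on the ingress gateway Pods
  servers:
    - port:
        number: 11112              # arbitrary TCP port
        name: tls-dicom
        protocol: TLS
      hosts:
        - "dicom.example.com"
      tls:
        mode: SIMPLE               # terminate TLS at the gateway
        credentialName: dicom-tls-cert   # TLS Secret in the gateway's namespace
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: dicom
spec:
  hosts:
    - "dicom.example.com"
  gateways:
    - dicom-gateway
  tcp:                             # plain TCP routing after TLS termination
    - match:
        - port: 11112
      route:
        - destination:
            host: dicom-server.default.svc.cluster.local
            port:
              number: 11112
```

For this to work, the ingress gateway's own Service also has to expose port 11112, which is a matter of installation settings rather than of these two resources.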

Istio Gateway Installation

There is more than one way to install Istio. In the past, the Istio Operator was used to install Istio. The Operator calls the IstioOperator API. Today the use of the Istio Operator is no longer recommended, but the IstioOperator API is still used implicitly by the istioctl installer. The two recommended ways to install Istio today are istioctl and Helm.
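The IstioOperator API is also the format of the configuration file that istioctl accepts. As a sketch, a file like the one below could be passed with `istioctl install -f overlay.yaml` to open an extra port on the ingress gateway. The file name and the `tls-dicom` port are hypothetical. The other three ports are, to my understanding, the defaults of the ingress gateway, repeated here because overriding the port list replaces it rather than appending to it.

```yaml
# overlay.yaml (hypothetical file name); the tls-dicom port is illustrative.
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  profile: default
  components:
    ingressGateways:
      - name: istio-ingressgateway
        enabled: true
        k8s:
          service:
            ports:
              - name: status-port
                port: 15021
                targetPort: 15021
              - name: http2
                port: 80
                targetPort: 8080
              - name: https
                port: 443
                targetPort: 8443
              - name: tls-dicom    # additional arbitrary TCP port
                port: 11112
                targetPort: 11112
```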

With istioctl, you can specify options imperatively, or use an overlay file as done in this lab. With Helm, Istio has multiple charts and requires multiple steps:

- install CRDs using the base chart

- install istiod using the istiod chart. Here is an example of values provided to Helm installer.

- install the ingress gateway using the gateway chart. Here is an example of values provided to the Helm installer for the ingress gateway. Note that an egress gateway uses the same Helm chart with different values.
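As a rough sketch of the last step, a values file for the gateway chart might look like the following. The key names reflect my understanding of the chart's `values.yaml` and should be checked against the chart version in use. The file name and the `tls-dicom` port are hypothetical.

```yaml
# values-ingressgateway.yaml (hypothetical file name)
# Key names should be verified against the gateway chart's own values.yaml.
service:
  type: LoadBalancer
  ports:
    - name: status-port
      port: 15021
      protocol: TCP
      targetPort: 15021
    - name: http2
      port: 80
      protocol: TCP
      targetPort: 80
    - name: https
      port: 443
      protocol: TCP
      targetPort: 443
    - name: tls-dicom              # additional arbitrary TCP port (illustrative)
      port: 11112
      protocol: TCP
      targetPort: 11112
```

Assuming the Istio chart repository has been added under the alias `istio`, the file would be applied with something like `helm install istio-ingressgateway istio/gateway -n istio-system -f values-ingressgateway.yaml`, after the base and istiod charts have been installed.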

These instructions describe how to manually install Istio gateways using Helm, including the installation of multiple Helm charts. I attempted to create a single chart to consolidate the multiple charts required for Istio. It was not successful, because of an error when referencing the same gateway chart dependency multiple times.