I regard AWS Systems Manager as a remarkably capable service. Nonetheless, there are a few reasons that make Ansible still a prevalent VM (EC2) management tool over Systems Manager (SSM). First, organizations already invested in their custom Ansible roles and playbooks want to reuse and expand those assets. The benefit is consistent VM management over time and across platforms (AWS, on-prem, Azure, etc.). Second, even among AWS shops, many enterprises have in the last few years adopted an AWS landing zone following the prescriptive multi-account pattern. However, AWS Systems Manager still lacks integration with AWS Organizations (except for a few non-core capabilities). This creates a need to manage EC2 instances across AWS accounts. In this post, we propose a secure method to manage a fleet of EC2 instances in multiple AWS accounts using Systems Manager, which also enables connectivity from an Ansible control node.

Prerequisites

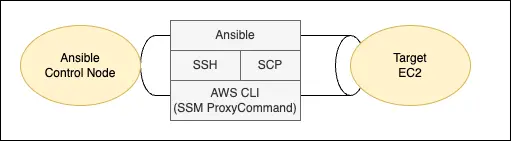

This proposal ties together a few CLI tools, including the AWS CLI, SSH, and Ansible, and it requires the cloud engineer to understand how they work. I'll start with the choice of tools.

Above, I discussed the benefits of Ansible. Since Ansible connects to Linux hosts over SSH, we still have to use SSH tools. Even though the SSM agent provides a way to connect to an EC2 instance without an RSA key pair, we still need SSH, since it is a well-established industry standard (RFC 4253) and the foundation of Ansible. The two technologies are not mutually exclusive; in fact, the SSM agent provides a secure enhancement to operating with SSH. Traditionally, each EC2 instance has to run the sshd service, which listens on TCP port 22 (or an alternative port as configured). For authentication we favour key pairs over passwords, but the open port is still an attack surface, vulnerable to brute-force and DDoS attacks. For EC2 instances on private networks, the SSH port is not reachable at all unless the bastion host is also in a connected network.

As I covered in a previous post, SSM Session Manager comes in handy here. The SSM agent runs on the instance and communicates outbound with the AWS backend. Since the agent runs as a privileged user on the OS, you can perform OS-level commands through SSM. Further, AWS provides a Session Manager plugin for the AWS CLI, which allows the AWS CLI to serve as the proxy command when making an SSH connection. Therefore SSM enables SSH connections without requiring port 22 to be open. In addition, we still authenticate with an RSA key pair as SSH requires, so a connection needs both the IAM permission to start the session and the private key, which further improves the security posture.

That explains the dependent tools. On the Ansible control node, apart from Ansible itself, we need the latest version of the AWS CLI with the Session Manager plugin, and we need to configure the AWS CLI properly to connect to EC2 instances across multiple AWS accounts.

Configure AWS CLI

This section discusses how to configure the AWS CLI. I have a couple of handy aliases for productivity, but they are not essential. For example, I often need to check the IAM identity making the call, and to list all configured profiles. So I added the following two entries to the `~/.aws/cli/alias` file:

```ini
[toplevel]
whoami = sts get-caller-identity --no-cli-pager --output yaml
profile = configure list-profiles
```

With these, `aws whoami` shows the calling IAM identity and `aws profile` lists the available profiles. Then we can start configuring the CLI profiles (in `~/.aws/config`). Since we'll be working with multiple AWS accounts, we have to manage multiple CLI profiles, which implies that:

1. We'd better use the `--profile` switch to explicitly specify the profile being used, instead of relying on the `AWS_PROFILE` environment variable;
2. As a security best practice, we should not configure profiles with long-term IAM credentials in the config file;
3. We must ensure the CLI doesn't prompt for login every time we switch profiles.
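To apply the first point in scripts as well, a small wrapper can always pass `--profile` and drop `AWS_PROFILE` from the child environment, so no call silently depends on whatever the shell happens to export. This is a minimal sketch assuming the AWS CLI v2 is on the `PATH`; the helper names are hypothetical.

```python
import os
import subprocess
from typing import Mapping, Sequence


def build_aws_command(profile: str, args: Sequence[str]) -> list[str]:
    """Build an AWS CLI argv that always names the profile explicitly."""
    if not profile:
        raise ValueError("an explicit --profile is required")
    return ["aws", *args, "--profile", profile]


def env_without_aws_profile(env: Mapping[str, str]) -> dict[str, str]:
    """Copy the environment minus AWS_PROFILE, so only --profile selects the account."""
    return {key: value for key, value in env.items() if key != "AWS_PROFILE"}


def run_aws(profile: str, args: Sequence[str]) -> str:
    """Run one AWS CLI call against `profile` and return stdout; raises on failure."""
    completed = subprocess.run(
        build_aws_command(profile, args),
        env=env_without_aws_profile(os.environ),
        capture_output=True,
        text=True,
        check=True,
    )
    return completed.stdout
```

For example, `run_aws("target_account_1", ["sts", "get-caller-identity"])` is the scripted equivalent of the `whoami` alias for one account.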

There are many ways to satisfy #3; we'll discuss two: using cross-account IAM roles, and using AWS SSO (now IAM Identity Center).

Bonus points if you enable command completion for the AWS CLI.

Configure AWS CLI Profiles

With cross-account IAM roles, the idea is that the client starts with one IAM identity and uses that identity to assume roles in several other accounts. The configuration looks like this:

```ini
[profile jump_account]
credential_process = /opt/bin/awscreds-custom --username helen

[profile target_account_1]
role_arn = arn:aws:iam::123456789011:role/OrganizationAccountAccessRole
source_profile = jump_account

[profile target_account_2]
role_arn = arn:aws:iam::123456789012:role/OrganizationAccountAccessRole
source_profile = jump_account
```

In this example, you start with an authenticated identity in the jump account, then assume a privileged IAM role named OrganizationAccountAccessRole in each target account. Typically such IAM roles are pre-configured (e.g. in a multi-account landing zone) with a trust policy that allows principals from the jump account. Once you're authenticated as the IAM identity in the jump account, you can use the profiles for the target accounts without being prompted for credentials again.
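With many target accounts, writing these stanzas by hand gets tedious. The sketch below generates them with Python's `configparser`, assuming every target account exposes the same role name (`OrganizationAccountAccessRole` here) and trusts the `jump_account` profile. The function name and the account list are illustrative.

```python
import configparser
import io


def render_cross_account_profiles(
    accounts: dict[str, str],
    role_name: str = "OrganizationAccountAccessRole",
    source_profile: str = "jump_account",
) -> str:
    """Return ~/.aws/config stanzas that assume `role_name` in each account.

    `accounts` maps a profile name to a 12-digit AWS account ID.
    """
    config = configparser.ConfigParser(interpolation=None)
    for profile, account_id in accounts.items():
        if not (len(account_id) == 12 and account_id.isdigit()):
            raise ValueError(f"not a 12-digit account ID: {account_id!r}")
        config[f"profile {profile}"] = {
            "role_arn": f"arn:aws:iam::{account_id}:role/{role_name}",
            "source_profile": source_profile,
        }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()
```

Review the output before appending it to `~/.aws/config`. The `jump_account` stanza with its `credential_process` stays hand-written.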

If you have configured IAM Identity Center for the multi-account environment, consider an alternative approach using SSO login. The configuration usually looks like this:

```ini
[sso-session sso]
sso_start_url = https://myorg.awsapps.com/start/
sso_region = us-east-1
sso_registration_scopes = sso:account:access

[profile target_account_1]
sso_session = sso
sso_account_id = 123456789011
sso_role_name = AWSAdministratorAccess

[profile target_account_2]
sso_session = sso
sso_account_id = 123456789012
sso_role_name = AWSAdministratorAccess
```

This approach is typical for human users with SSO credentials. In this example, to authenticate the SSO session, start with `aws sso login --sso-session sso`. Then you can use all the profiles by specifying the `--profile` switch without having to log in again until the SSO session expires.
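Because Ansible will need a profile per instance, it helps to know which profile reaches which account. The sketch below reads a config file in either style above and returns an account-ID-to-profile map. It relies only on the `role_arn` and `sso_account_id` keys shown in these examples. When several profiles reach the same account, it keeps the first one it encounters, which is a simplifying assumption. The function name is hypothetical.

```python
import configparser


def account_to_profile(config_text: str) -> dict[str, str]:
    """Map AWS account IDs to the first CLI profile that targets them."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(config_text)
    mapping: dict[str, str] = {}
    for section in parser.sections():
        if not section.startswith("profile "):
            continue  # skips [sso-session ...] and a bare [default] section
        name = section[len("profile "):]
        options = parser[section]
        if "sso_account_id" in options:
            account_id = options["sso_account_id"]
        elif "role_arn" in options:
            # arn:aws:iam::<account-id>:role/<name>, so field 4 is the account ID
            account_id = options["role_arn"].split(":")[4]
        else:
            continue  # e.g. the jump account's credential_process profile
        mapping.setdefault(account_id, name)
    return mapping
```

Call it with the contents of `~/.aws/config`, for example `account_to_profile(Path.home().joinpath(".aws", "config").read_text())`.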

Configure SSH to EC2 via SSM

The EC2 instance must be connected to the Systems Manager endpoints before one can SSH to it using the plugin. Once connected, you should find the instance in Fleet Manager. For this to happen, a few conditions must be met. First, the instance must be able to reach the endpoints, either via the public Internet or, in a private subnet, via VPC interface endpoints. Second, the instance profile must contain an IAM role with appropriate permissions; we can attach the AWS managed policy AmazonSSMManagedInstanceCore to the role. In addition, if we record SSM sessions to an encrypted S3 bucket, the instance profile must have permission to use the encryption key.
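The two permission requirements can be written down as IAM policy documents. The sketch below builds them as plain Python dictionaries. It includes the EC2 trust policy for the instance role and an optional inline policy for logging sessions to a KMS-encrypted S3 bucket. The bucket name and key ARN are placeholders. The S3 and KMS actions listed are my assumption of a minimal set, so check them against the Session Manager documentation for your logging setup. The managed policy itself is attached by ARN.

```python
# AWS managed policy granting the core SSM agent permissions.
SSM_CORE_POLICY_ARN = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore"


def ec2_trust_policy() -> dict:
    """Trust policy letting EC2 assume the role behind the instance profile."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "ec2.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def session_logging_policy(bucket: str, kms_key_arn: str) -> dict:
    """Inline policy for writing session logs to an encrypted bucket (assumed minimal set)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # upload session log objects
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
            {   # let the agent check the bucket's encryption settings
                "Effect": "Allow",
                "Action": ["s3:GetEncryptionConfiguration"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {   # use the bucket's KMS key for encrypted objects
                "Effect": "Allow",
                "Action": ["kms:GenerateDataKey"],
                "Resource": kms_key_arn,
            },
        ],
    }
```

Serialized with `json.dumps`, the trust policy can be passed to `aws iam create-role --assume-role-policy-document`. The managed policy is then attached with `aws iam attach-role-policy --policy-arn` using `SSM_CORE_POLICY_ARN`.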

The SSM agent uses this IAM role. The agent runs as a service on Linux or Windows machines, and many AWS managed AMIs come with it pre-installed. If that is not the case, you'd install the agent in your own AMI, or in user data, which requires downloading the installer. With this configuration in place, you can connect to the instance via SSM, starting a session with either the AWS CLI (`aws ssm start-session`) or the AWS web console. To use the SSH CLI utility, we install the Session Manager plugin along with the AWS CLI on the SSH client machine. We also place a public key on the EC2 instance and run the ssh command with the matching private key. The SSH client configuration (`~/.ssh/config`) needs an entry such as:

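```
Host i-* mi-*
    ProxyCommand sh -c "aws ssm start-session --target %h --document-name AWS-StartSSHSession --parameters 'portNumber=%p'"
    User ec2-user
    IdentityFile ~/.ssh/id_rsa
```

With this entry, you can SSH directly to an instance by its ID (`i-` for EC2 instances, `mi-` for hybrid managed nodes). ssh then invokes the ProxyCommand, which starts an SSM session with the AWS-StartSSHSession document. Because the command passes no `--profile`, the AWS CLI resolves credentials the usual way (e.g. the default profile or `AWS_PROFILE`).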
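In a multi-account setup, the ProxyCommand is the piece that gets repeated with a different profile each time. A small builder keeps it consistent. This is a sketch and the function name is hypothetical. It produces the same `sh -c` form as the entry above, single-quoted rather than double-quoted, with an optional `--profile` appended. `%h` and `%p` are expanded by ssh itself, so they stay as literal tokens.

```python
import shlex
from typing import Optional


def ssm_proxy_command(profile: Optional[str] = None) -> str:
    """Return an ssh ProxyCommand that tunnels through AWS-StartSSHSession.

    %h (target host, here the instance ID) and %p (port) are expanded by ssh,
    so they are left untouched in the returned string.
    """
    aws_args = [
        "aws", "ssm", "start-session",
        "--target", "%h",
        "--document-name", "AWS-StartSSHSession",
        "--parameters", "portNumber=%p",
    ]
    if profile:
        aws_args += ["--profile", profile]
    # Join the aws invocation into one shell command, then hand it to sh -c.
    inner = shlex.join(aws_args)
    return f"sh -c {shlex.quote(inner)}"
```

For example, `ssm_proxy_command("target_account_1")` yields `sh -c 'aws ssm start-session --target %h --document-name AWS-StartSSHSession --parameters portNumber=%p --profile target_account_1'`, which can be embedded in `-o ProxyCommand="..."` for Ansible.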

Configure Ansible Inventory

Using the method above, we can SSH to an instance on top of SSM without opening port 22. Similarly, we can configure Ansible to connect to the instance without port 22. The inventory configuration looks like this:

```yaml
mytest:
  hosts:
    instance1:
      ansible_host: i-00aabbffcc7755221
      ansible_user: ubuntu
      ansible_ssh_common_args: -o ProxyCommand="aws ssm start-session --target %h --document-name AWS-StartSSHSession --profile target_account_1"
    instance2:
      ansible_host: i-eedd88ff66aa22442
      ansible_user: ubuntu
      ansible_ssh_common_args: -o StrictHostKeyChecking=no -o ProxyCommand="sh -c \"aws ssm start-session --target %h --document-name AWS-StartSSHSession --parameters 'portNumber=%p' --profile target_account_2 \""
```

Note that I used two similar patterns for `ansible_ssh_common_args`; both work. Each entry references its own profile. This is necessary because Ansible has no knowledge of which account, and hence which profile, an instance belongs to.
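With more than a handful of instances, maintaining the per-host profile by hand becomes error-prone. The sketch below generates the same group structure from a mapping of host names to (instance ID, profile) pairs, using the first ProxyCommand pattern above. The group name, user, and mapping are illustrative. It emits JSON, which also parses as YAML, so the output can be saved as, for example, `mytest.yaml`.

```python
import json


def build_static_inventory(
    hosts: dict[str, tuple[str, str]],
    group: str = "mytest",
    user: str = "ubuntu",
) -> dict:
    """Build an inventory where every host carries its own CLI profile.

    `hosts` maps an inventory host name to (instance_id, aws_cli_profile).
    """
    host_vars = {}
    for name, (instance_id, profile) in hosts.items():
        proxy = (
            "aws ssm start-session --target %h "
            "--document-name AWS-StartSSHSession "
            f"--profile {profile}"
        )
        host_vars[name] = {
            "ansible_host": instance_id,
            "ansible_user": user,
            "ansible_ssh_common_args": f'-o ProxyCommand="{proxy}"',
        }
    return {group: {"hosts": host_vars}}


def render_inventory(inventory: dict) -> str:
    """Serialize to JSON, which YAML parsers also accept."""
    return json.dumps(inventory, indent=2)
```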

Another way to get Ansible to connect to instances is the connection plugin community.aws.aws_ssm. To use it, set `ansible_connection: community.aws.aws_ssm` (e.g. as a host variable) along with the other required variables (e.g. the profile). This method does not require an SSH channel, but it requires an S3 bucket for transferring files, and hence IAM permissions for that bucket on the control node.
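For comparison, the host variables for the `community.aws.aws_ssm` connection plugin can be generated the same way. The variable names below (`ansible_aws_ssm_profile`, `ansible_aws_ssm_bucket_name`, `ansible_aws_ssm_region`) are the ones I understand the plugin to read, so verify them against the collection version you install. The bucket, region, and function names are placeholders.

```python
def aws_ssm_host_vars(instance_id: str, profile: str, bucket: str, region: str) -> dict:
    """Host variables routing one host through community.aws.aws_ssm (assumed names)."""
    return {
        # Assumption: the plugin targets the host's address, set here to the instance ID.
        "ansible_host": instance_id,
        "ansible_connection": "community.aws.aws_ssm",
        "ansible_aws_ssm_profile": profile,
        # S3 bucket the plugin uses to stage files it transfers to the instance.
        "ansible_aws_ssm_bucket_name": bucket,
        "ansible_aws_ssm_region": region,
    }


def aws_ssm_inventory(
    hosts: dict[str, tuple[str, str]], bucket: str, region: str, group: str = "mytest"
) -> dict:
    """Group of hosts, each mapped from (instance_id, profile) to aws_ssm host vars."""
    return {
        group: {
            "hosts": {
                name: aws_ssm_host_vars(instance_id, profile, bucket, region)
                for name, (instance_id, profile) in hosts.items()
            }
        }
    }
```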

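Ansible supports dynamic inventory in three ways: an inventory source file (for an existing inventory plugin), a custom inventory plugin, and an inventory script (any executable, commonly written in Python). Taking a source file for EC2 as an example, add the following as the content of `aws_ec2.yaml` (the amazon.aws.aws_ec2 plugin only accepts file names ending in `aws_ec2.yml` or `aws_ec2.yaml`):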

```yaml
plugin: amazon.aws.aws_ec2
# Attach the default AWS profile
aws_profile: target_account_1
compose:
  ansible_host: instance_id
  ansible_user: "'ubuntu'"
  ansible_ssh_common_args: "'-o ProxyCommand=\"aws ssm start-session --target %h --document-name AWS-StartSSHSession --profile target_account_1 \"'"
```

Then we can display the rendered inventory list and Ansible-ping the instances.

```shell
ansible-inventory -i aws_ec2.yaml --list -y | less
ansible all -i aws_ec2.yaml -m ping
```

For more flexibility, the composed variables can use Jinja2 expressions to generate their values. Either way, we produce one inventory source per profile using the amazon.aws.aws_ec2 inventory plugin. For greater flexibility, such as consolidating instances from all accounts into a single inventory, consider writing your own inventory script, or even your own inventory plugin.
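Because the source above hard-codes one profile in two places, one way to cover several accounts is to generate one source file per profile into a directory and pass that directory to `-i`. Ansible then loads every inventory source it finds there. The names follow the `*.aws_ec2.yaml` rule mentioned above. The sketch writes JSON, which is valid YAML, to avoid a PyYAML dependency. The directory, profile, and function names are illustrative.

```python
import json
from pathlib import Path

PROXY = (
    "aws ssm start-session --target %h "
    "--document-name AWS-StartSSHSession --profile {profile}"
)


def aws_ec2_source(profile: str, user: str = "ubuntu") -> dict:
    """Content of one amazon.aws.aws_ec2 inventory source bound to a profile."""
    proxy = PROXY.format(profile=profile)
    return {
        "plugin": "amazon.aws.aws_ec2",
        "aws_profile": profile,
        "compose": {
            "ansible_host": "instance_id",
            # compose values are Jinja2 expressions, so literals need inner quotes
            "ansible_user": f"'{user}'",
            "ansible_ssh_common_args": f"'-o ProxyCommand=\"{proxy}\"'",
        },
    }


def write_sources(profiles: list[str], directory: Path) -> list[Path]:
    """Write <profile>.aws_ec2.yaml for each profile; JSON content is valid YAML."""
    directory.mkdir(parents=True, exist_ok=True)
    written = []
    for profile in profiles:
        path = directory / f"{profile}.aws_ec2.yaml"
        path.write_text(json.dumps(aws_ec2_source(profile), indent=2) + "\n")
        written.append(path)
    return written
```

For example, `write_sources(["target_account_1", "target_account_2"], Path("inventory"))` followed by `ansible all -i inventory/ -m ping`.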

Conclusion

In this post we proposed a way to manage instances across AWS accounts. The two main challenges are establishing the communication channel (SSH on top of SSM) and generating inventory data for Ansible. Some AWS features can generate inventory data, such as resource data sync in Systems Manager, or an AWS Config aggregator. Unfortunately, neither produces the data in a format directly compatible with Ansible inventory. Therefore, you might have to write a custom Ansible dynamic inventory script (in Python) that reads the inventory data from an AWS Config aggregator (which supports AWS Organizations). A side benefit of such a script is that it is usually faster than the aws_ec2 inventory plugin.
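A possible starting point for such a script follows Ansible's inventory-script protocol. With `--list`, it prints all groups plus a `_meta.hostvars` block. With `--host`, it prints an empty object, because host variables are already in `_meta`. The sketch makes several assumptions. It expects the aggregator query output to have been saved beforehand, for example with `aws configservice select-aggregate-resource-config --configuration-aggregator-name <name> --expression "SELECT accountId, resourceId WHERE resourceType = 'AWS::EC2::Instance'" --output json`. It assumes each entry in `Results` is a JSON string carrying `accountId` and `resourceId`. Finally, it assumes the account-to-profile map is maintained by hand. The `EC2_RESULTS_FILE` variable, the file name, and grouping by account are hypothetical choices.

```python
#!/usr/bin/env python3
"""Sketch of an Ansible inventory script fed by AWS Config aggregator results."""
import json
import os
import sys

# Hypothetical account-to-profile map; keep it in sync with ~/.aws/config.
ACCOUNT_PROFILES = {
    "123456789011": "target_account_1",
    "123456789012": "target_account_2",
}


def load_results(path: str) -> list[dict]:
    """Read saved select-aggregate-resource-config output (JSON)."""
    with open(path, encoding="utf-8") as handle:
        payload = json.load(handle)
    # Each entry in "Results" is itself a JSON-encoded string.
    return [json.loads(item) for item in payload.get("Results", [])]


def build_inventory(records: list[dict], account_profiles: dict[str, str], user: str = "ubuntu") -> dict:
    """Group instances by account and attach a per-account SSM ProxyCommand."""
    inventory: dict = {"_meta": {"hostvars": {}}}
    for record in records:
        account_id = record["accountId"]
        instance_id = record["resourceId"]
        profile = account_profiles.get(account_id)
        if profile is None:
            continue  # no CLI profile reaches this account, so skip it
        group = f"account_{account_id}"
        inventory.setdefault(group, {"hosts": []})["hosts"].append(instance_id)
        proxy = (
            "aws ssm start-session --target %h "
            f"--document-name AWS-StartSSHSession --profile {profile}"
        )
        # The instance ID is the inventory host name, so ssh's %h expands to it.
        inventory["_meta"]["hostvars"][instance_id] = {
            "ansible_user": user,
            "ansible_ssh_common_args": f'-o ProxyCommand="{proxy}"',
        }
    return inventory


def main(argv: list[str]) -> str:
    """Return JSON for --list; '{}' otherwise (--host vars already live in _meta)."""
    if len(argv) > 1 and argv[1] == "--list":
        path = os.environ.get("EC2_RESULTS_FILE", "ec2_results.json")
        return json.dumps(build_inventory(load_results(path), ACCOUNT_PROFILES))
    return json.dumps({})


if __name__ == "__main__":
    print(main(sys.argv))
```

After `chmod +x`, the script can be used like any inventory source, e.g. `ansible all -i ec2_config_inventory.py -m ping`. The query could also be narrowed, for instance to running instances only.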